Online Fast Adaptation and Knowledge Accumulation: a New Approach to Continual Learning

Résumé

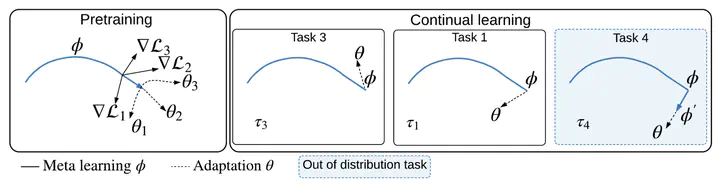

Continual learning studies agents that learn from streams of tasks without forgetting previous ones while adapting to new ones. Two recent continual-learning scenarios have opened new avenues of research. In meta-continual learning, the model is pre-trained to minimize catastrophic forgetting of previous tasks. In continual-meta learning, the aim is to train agents for faster remembering of previous tasks through adaptation. In their original formulations, both methods have limitations. We stand on their shoulders to propose a more general scenario, OSAKA, where an agent must quickly solve new (out-of-distribution) tasks, while also requiring fast remembering. We show that current continual learning, meta-learning, meta-continual learning, and continual-meta learning techniques fail in this new scenario. We propose Continual-MAML, an online extension of the popular MAML algorithm as a strong baseline for this scenario. We empirically show that Continual-MAML is better suited to the new scenario than the aforementioned methodologies, as well as standard continual learning and meta-learning approaches.