Trustworthiness

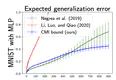

Deep ensembles offer consistent performance gains, both in terms of reduced generalization error and improved predictive uncertainty …

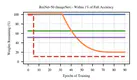

Recent work has explored the possibility of pruning neural networks at initialization. We assess proposals for doing so: SNIP (Lee et …

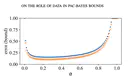

The dominant term in PAC-Bayes bounds is often the Kullback–Leibler divergence between the posterior and prior. For so-called …

In suitably initialized wide networks, small learning rates transform deep neural networks (DNNs) into neural tangent kernel (NTK) …

In requiring that a statement of broader impact accompany all submissions for this year’s conference, the NeurIPS program chairs …

Recent work has explored the possibility of pruning neural networks at initialization. We assess proposals for doing so: SNIP (Lee et …

The information-theoretic framework of Russo and J. Zou (2016) and Xu and Raginsky (2017) provides bounds on the generalization error …

We provide a negative resolution to a conjecture of Steinke and Zakynthinou (2020a), by showing that their bound on the conditional …

To date, there has been no formal study of the statistical cost of interpretability in machine learning. As such, the discourse around …

We propose to study the generalization error of a learned predictor ĥ in terms of that of a surrogate (potentially randomized) …