Abstract

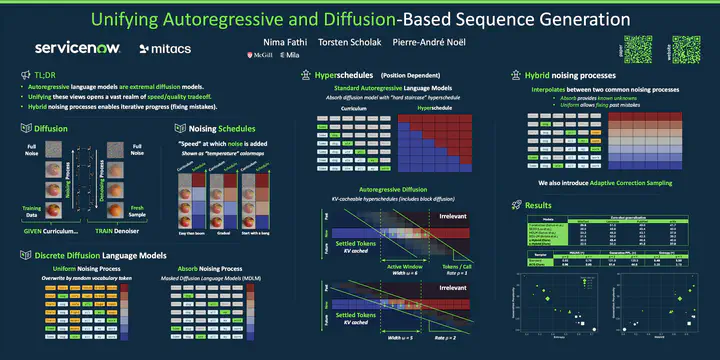

We present significant extensions to diffusion-based sequence generation models, blurring the line with autoregressive language models. First, we introduce hyperschedules, which assign distinct noise schedules to individual token positions, generalizing both autoregressive models (e.g., GPT) and conventional diffusion models (e.g., SEDD, MDLM) as special cases. Second, we propose two hybrid token-wise noising processes that interpolate between absorbing and uniform processes, enabling the model to fix past mistakes, and we introduce a novel inference algorithm that leverages this new feature in a simplified context inspired by MDLM. To support efficient training and inference, we design attention masks compatible with KV-caching. Our methods achieve state-of-the-art perplexity and generate diverse, high-quality sequences across standard benchmarks, suggesting a promising path for autoregressive diffusion-based sequence generation.
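As a rough illustration of how per-position noise schedules can recover both model families, the sketch below represents a hyperschedule as a table of noise levels indexed by (generation step, token position), where 1.0 means fully noised and 0.0 means clean. The function names, the linear schedule, and the table representation are assumptions made for this example; they are not taken from the paper's implementation.

```python
def diffusion_hyperschedule(num_steps, seq_len):
    """Conventional diffusion: every position shares the same schedule.

    Assumption: a linear schedule; entry [t][i] is the noise level of
    position i after generation step t (1.0 = fully noised, 0.0 = clean).
    """
    return [
        [1.0 - (t + 1) / num_steps for _ in range(seq_len)]
        for t in range(num_steps)
    ]


def autoregressive_hyperschedule(seq_len):
    """Autoregressive limit: position i becomes clean at step i.

    Positions to the right of the current step stay fully noised,
    which mimics left-to-right, one-token-per-step generation.
    """
    return [
        [0.0 if i <= t else 1.0 for i in range(seq_len)]
        for t in range(seq_len)
    ]


if __name__ == "__main__":
    for row in diffusion_hyperschedule(num_steps=4, seq_len=3):
        print(row)
    for row in autoregressive_hyperschedule(seq_len=3):
        print(row)
```

In this toy view, intermediate hyperschedules would fill the table with patterns between these two extremes, for example by denoising a sliding window of positions over several steps.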