Breaking the Bottleneck with DiffuApriel: High-Throughput Diffusion LMs with Mamba Backbone

Abstract

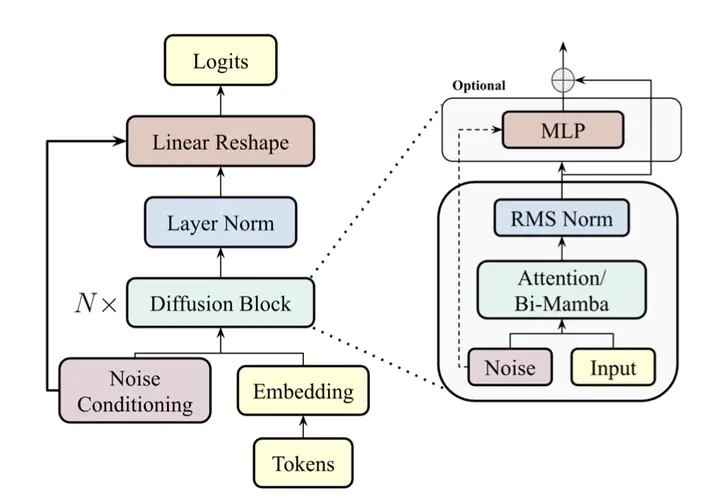

Diffusion-based language models have recently emerged as a promising alternative to autoregressive generation, yet their reliance on Transformer backbones limits inference efficiency due to quadratic attention and KV-cache overhead. In this work, we introduce DiffuApriel, a masked diffusion language model built on a bidirectional Mamba backbone that combines the diffusion objective with linear-time sequence modeling. DiffuApriel matches the performance of Transformer-based diffusion models while achieving up to 4.4× higher inference throughput for long sequences with a 1.3B model. We further propose DiffuApriel-H, a hybrid variant that interleaves attention and Mamba layers, offering up to 2.6× throughput improvement with balanced global and local context modeling. Our results demonstrate that bidirectional state-space architectures serve as strong denoisers in masked diffusion LMs, providing a practical and scalable foundation for faster, memory-efficient text generation.
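To make the two ingredients named above concrete, the following is a minimal toy sketch, not the DiffuApriel implementation. It assumes a fixed scalar decay in place of Mamba's input-dependent state-space parameters, and the names `MASK`, `mask_tokens`, and `bidirectional_scan` are hypothetical, introduced only for illustration. It shows (i) a masking step of the kind a masked diffusion objective trains a denoiser to invert, and (ii) a recurrence run in both directions, so every position receives left and right context at a cost linear in sequence length.

```python
import random

MASK = "[MASK]"  # hypothetical mask token, for illustration only


def mask_tokens(tokens, mask_rate, rng):
    """Noising step: replace each token with MASK independently
    with probability mask_rate."""
    return [MASK if rng.random() < mask_rate else tok for tok in tokens]


def bidirectional_scan(xs, decay=0.5):
    """Toy linear recurrence h_t = decay * h_{t-1} + x_t, run left-to-right
    and right-to-left. Each pass is O(len(xs)).

    Assumption: a fixed scalar decay stands in for the learned,
    input-dependent parameters of a real Mamba layer.
    """
    n = len(xs)
    fwd = [0.0] * n
    bwd = [0.0] * n

    h = 0.0
    for t in range(n):              # left-to-right pass
        h = decay * h + xs[t]
        fwd[t] = h

    h = 0.0
    for t in range(n - 1, -1, -1):  # right-to-left pass
        h = decay * h + xs[t]
        bwd[t] = h

    # Both passes include x_t itself, so subtract it once.
    return [f + b - x for f, b, x in zip(fwd, bwd, xs)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(mask_tokens(["the", "cat", "sat", "down"], 0.5, rng))
    # An impulse at the last position is visible at the first position,
    # which a purely causal (left-to-right) scan could not provide.
    print(bidirectional_scan([0.0, 0.0, 1.0]))
```

A causal scan alone would leave the first position blind to later tokens; summing the two directions is one simple way to give a denoiser full-sequence context without attention's quadratic cost.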