DualChronos: Context-Aided Time Series Forecasting with Dual Modalities

Abstract

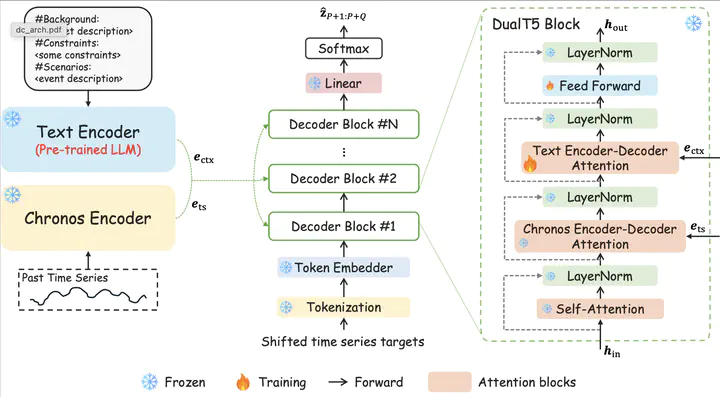

The dynamics of complex systems often depend heavily on external context, and natural language is an intuitive medium for practitioners to convey that context. However, leveraging such context to adapt forecasts remains challenging because of the modality gap between text and numerical data. Recent multimodal time-series foundation models have begun to bridge this gap and improve accuracy, yet they do not demonstrate how context actively reshapes their predictions. A significant obstacle is the scarcity of datasets in which context is strongly associated with temporal dynamics. To address these limitations, we introduce DualChronos, a dual-encoder time-series foundation model explicitly designed to fuse natural-language context with numerical inputs. Pretrained on a large corpus of scenario descriptions generated by large language models, DualChronos learns meaningful alignments between context and temporal dynamics, enabling forecasts that adapt directly to contextual cues.