Guarantees

Embodied agents face significant challenges when tasked with performing actions in diverse environments, particularly in generalizing …

The dominant term in PAC-Bayes bounds is often the Kullback–Leibler divergence between the posterior and prior. For so-called …

In suitably initialized wide networks, small learning rates transform deep neural networks (DNNs) into neural tangent kernel (NTK) …

The information-theoretic framework of Russo and J. Zou (2016) and Xu and Raginsky (2017) provides bounds on the generalization error …

We provide a negative resolution to a conjecture of Steinke and Zakynthinou (2020a), by showing that their bound on the conditional …

We propose to study the generalization error of a learned predictor ĥ in terms of that of a surrogate (potentially randomized) …

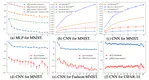

We study whether a neural network optimizes to the same, linearly connected minimum under different samples of SGD noise (e.g., random …

Pruning is a well-established technique for removing unnecessary structure from neural networks after training to improve the …

We study whether a neural network optimizes to the same, linearly connected minimum under different samples of SGD noise (e.g., random …

In this work, we improve upon the stepwise analysis of noisy iterative learning algorithms initiated by Pensia, Jog, and Loh (2018) and …