Abstract

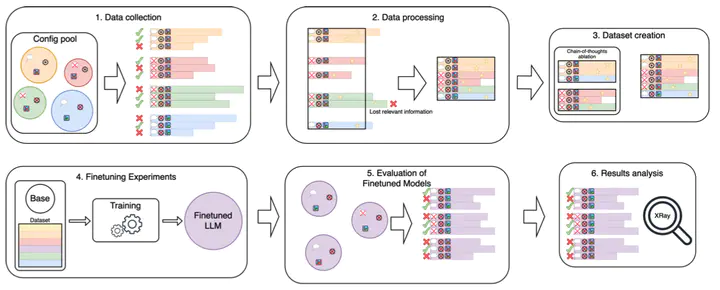

Recent advances in large language models (LLMs) have spurred interest in autonomous agents that can perform complex tasks in a human-like manner. Despite this progress, even the most advanced models often lack robustness across benchmarks, while smaller open-source models lag behind in performance. This study introduces a novel merging-based fine-tuning approach that enhances the capabilities of smaller, cost-efficient LLMs by combining agentic fine-tuning with instruction tuning, using successful traces from stronger models as guidance. We outline a complete pipeline for data collection, filtering, and supervised fine-tuning, and we examine key behavior-cloning parameters. Our experiments show that simply training to predict expert trajectories does not consistently improve task performance, exposing problems such as catastrophic forgetting and the loss of reasoning abilities. To address these problems, we propose AgentMerge, a model-merging method based on agentic vectors, and demonstrate that it improves generalization and mitigates forgetting. We also release an open-source codebase and a 140M-token dataset for the research community.
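The abstract does not say how agentic vectors are computed or merged. The sketch below assumes the common task-arithmetic formulation: an agentic vector is the per-parameter difference between an agent-fine-tuned model and its base, and it is added, scaled by a coefficient λ, to an instruction-tuned model that shares the same base, i.e. θ_merged = θ_instruct + λ(θ_agent − θ_base). The function names, the toy weights, and the value of λ are hypothetical illustrations, not the paper's implementation.

```python
from typing import Dict, List

# Hypothetical representation: parameter name -> flat list of weights.
Weights = Dict[str, List[float]]


def task_vector(finetuned: Weights, base: Weights) -> Weights:
    """Per-parameter difference finetuned - base (assumed 'agentic vector')."""
    return {
        name: [f - b for f, b in zip(finetuned[name], base[name])]
        for name in base
    }


def merge(target: Weights, vector: Weights, scale: float = 1.0) -> Weights:
    """Task arithmetic: add a scaled task vector to a target model's weights."""
    return {
        name: [t + scale * v for t, v in zip(target[name], vector[name])]
        for name in target
    }


# Toy example with one 3-element "layer"; all values are made up.
base = {"layer.w": [0.0, 1.0, 2.0]}
agent_ft = {"layer.w": [0.5, 1.0, 1.0]}    # assumed: base after agentic fine-tuning
instruct = {"layer.w": [0.25, 1.25, 2.25]}  # assumed: base after instruction tuning

agentic = task_vector(agent_ft, base)       # {'layer.w': [0.5, 0.0, -1.0]}
merged = merge(instruct, agentic, scale=0.5)
print(merged)                               # {'layer.w': [0.5, 1.25, 1.75]}
```

Under this assumption, λ = 0 recovers the instruction-tuned model unchanged, and larger λ injects more of the agentic behavior; how the paper actually chooses or tunes such a coefficient is not stated in the abstract.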