Abstract

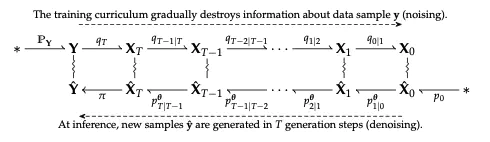

We take significant steps toward unifying autoregressive and diffusion-based sequence generation by extending the SEDD discrete diffusion framework in two ways. First, we introduce hyperschedules, which assign distinct noise schedules to different positions within a sequence. Under this formulation, standard autoregressive models (e.g., GPT) and conventional diffusion models (e.g., SEDD) emerge as two extreme cases of a broader design space. Second, we propose a hybrid token-wise transition matrix that interpolates between the absorbing and uniform processes, combining the commitment of masking with the flexibility of uniform token replacement. We also develop efficient training and inference strategies based on custom attention masks that enable KV-caching despite the architectural challenges involved. Experiments on benchmark datasets show that our approach achieves state-of-the-art perplexity and produces high-quality, diverse generated sequences. These results open promising avenues for further research in autoregressive diffusion-based sequence generation.
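To make the idea of a hyperschedule concrete, the following is a minimal illustrative sketch, not the implementation used in this work. It assumes a hyperschedule can be represented as a table of noise levels indexed by generation step and sequence position, with 1.0 meaning fully noised and 0.0 meaning clean. The function names and the linear shape of the shared schedule are hypothetical choices made for illustration only.

```python
# Illustrative sketch only: the representation (a step-by-position table of
# noise levels in [0, 1]) and all function names are assumptions, not the
# paper's actual formulation.

def diffusion_hyperschedule(seq_len, num_steps):
    """Diffusion-like extreme: every position shares one schedule.

    Row t holds the noise level of each position at generation step t.
    The shared schedule is linear here purely for simplicity.
    """
    return [[1.0 - t / num_steps] * seq_len for t in range(num_steps + 1)]


def autoregressive_hyperschedule(seq_len):
    """Autoregressive-like extreme: one position is resolved per step.

    At step t, positions 0..t-1 are clean (0.0) and the rest are still
    fully noised (1.0), mimicking left-to-right generation.
    """
    return [
        [0.0 if pos < t else 1.0 for pos in range(seq_len)]
        for t in range(seq_len + 1)
    ]


def is_valid_hyperschedule(table):
    """Check that each position starts fully noised, ends clean,
    and never becomes noisier as generation proceeds."""
    seq_len = len(table[0])
    for pos in range(seq_len):
        column = [row[pos] for row in table]
        if column[0] != 1.0 or column[-1] != 0.0:
            return False
        if any(later > earlier for earlier, later in zip(column, column[1:])):
            return False
    return True


if __name__ == "__main__":
    for row in autoregressive_hyperschedule(3):
        print(row)
    for row in diffusion_hyperschedule(3, 4):
        print(row)
```

In this representation, schedules between the two extremes (for example, resolving blocks of positions with staggered per-block schedules) would be other tables that pass the same validity check.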