Large Language Models

Building reliable computer-use agents requires grounding: accurately connecting natural language instructions to the correct on-screen …

Language model activations entangle concepts that mediate their behavior, making it difficult to interpret these factors, which has …

Diffusion-based language models have recently emerged as a promising alternative to autoregressive generation, yet their reliance on …

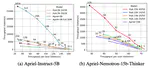

We introduce multi-token prediction (MTP) variants of the Apriel model family, designed to generate multiple tokens per forward pass. …

Large Language Models achieve their success through transformer architectures with attention mechanisms that compute token …

Reinforcement Learning (RL) is increasingly utilized to enhance the reasoning capabilities of Large Language Models (LLMs). However, …

UI grounding is a fundamental task for enterprise workflow automation. This task maps natural language instructions to precise pixel …

We present significant extensions to diffusion-based language models, blurring the line with autoregressive ones. We introduce …

We present WebMMU, a multilingual benchmark that evaluates three core web tasks: (1) website visual question answering, (2) code …

We introduce a framework for optimizing domain-specific dataset construction in foundation model training. Specifically, we seek a …